Spring Batch is a framework within the larger Spring ecosystem that provides tools and conventions for building and running batch-processing applications. It is well suited to large-scale, data-intensive batch jobs such as ETL (Extract, Transform, Load) processes, data import/export, and report generation.

Key Components and Concepts of Spring Batch

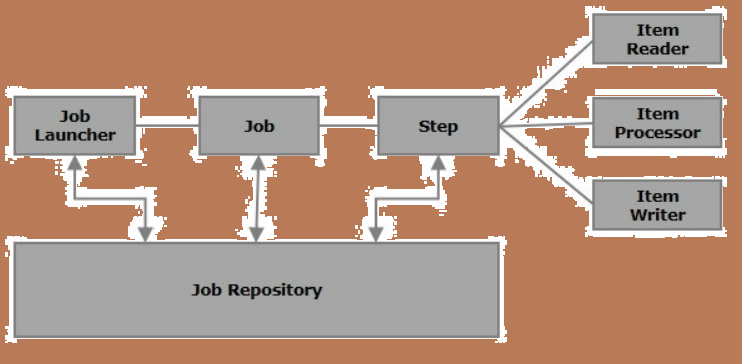

- Job: A job is the highest-level concept in Spring Batch. It represents a complete batch process and can consist of one or more steps. A job defines the overall flow of processing.

- Step: A step is a fundamental unit of work in a batch job. A job contains one or more steps, and each step typically performs a specific task such as reading data, processing it, and writing the results. Steps can be executed sequentially or in parallel.

- ItemReader: An ItemReader reads data from a data source one item at a time; its read() method returns null once the input is exhausted. The step groups the items it reads into chunks, which keeps memory usage bounded and improves performance. Spring Batch provides various built-in ItemReader implementations for reading data from databases, flat files, XML, and more.

- ItemProcessor: An ItemProcessor transforms or processes each item read by the ItemReader. You can define custom business logic here, such as data validation, filtering, or enrichment; returning null from the processor filters the item out. Processed items are then passed to the ItemWriter for output.

- ItemWriter: An ItemWriter is responsible for writing processed items to an output destination, such as a database, file, or message queue. Spring Batch offers various ItemWriter implementations for different output formats and destinations.

- JobRepository: The JobRepository is a critical component that manages metadata about job and step executions. It stores information about job instances, their statuses, and step executions. By default, Spring Batch uses a relational database to store this metadata, but you can customize it to use other storage mechanisms.

- JobLauncher: The JobLauncher starts job executions. It receives a job and a set of job parameters (which may be empty), creates a job execution through the JobRepository, and manages the execution lifecycle.

- Listeners: Spring Batch allows you to attach listeners to various lifecycle events during job and step execution. You can implement custom listeners to perform actions before or after certain events, such as before a step starts or after it is completed.

- Chunk Processing: Spring Batch uses a chunk-oriented processing model in which data is read, processed, and written in chunks of a configured size (the commit interval), and each chunk is written within a single transaction. This approach handles large datasets efficiently without holding the entire dataset in memory.

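- Retry & Skip: Spring Batch provides mechanisms for handling errors during batch processing. You can configure retry logic for items that fail with transient errors and skip items that cannot be processed at all. On a fault-tolerant step, these are controlled through settings such as retryLimit and skipLimit.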

- Partitioning: For parallel processing of large datasets, Spring Batch supports partitioning. A manager step splits the input into partitions (for example, ranges of record ids), and each partition is processed by its own worker step execution. These executions can run concurrently on multiple threads or even on different machines.

- Scheduling: You can schedule batch jobs to run at specific intervals or times using schedulers like Quartz or by leveraging Spring’s built-in scheduling capabilities.
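The reader, processor, writer, and chunk entries above fit together in one loop. The following is a framework-free sketch in plain Java that roughly mirrors the chunk-oriented loop described in the Spring Batch reference documentation. It uses java.util.function types in place of Spring Batch's ItemReader, ItemProcessor, and ItemWriter, and the names ChunkModel, runStep, and commitInterval are hypothetical:

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Supplier;

// Framework-free model of a chunk-oriented step. ChunkModel and runStep are
// hypothetical names for illustration, not Spring Batch classes.
public class ChunkModel {

    /** Runs read-process-write until the reader is exhausted; returns the number of chunks written. */
    public static <I, O> int runStep(Supplier<I> reader,
                                     Function<I, O> processor,
                                     Consumer<List<O>> writer,
                                     int commitInterval) {
        if (commitInterval < 1) {
            throw new IllegalArgumentException("commitInterval must be >= 1");
        }
        int chunksWritten = 0;
        boolean exhausted = false;
        while (!exhausted) {
            // 1. Read up to commitInterval items; null means "no more input".
            List<I> inputs = new ArrayList<>();
            while (inputs.size() < commitInterval) {
                I item = reader.get();
                if (item == null) {
                    exhausted = true;
                    break;
                }
                inputs.add(item);
            }
            // 2. Process each item; a null result filters the item out.
            List<O> outputs = new ArrayList<>();
            for (I item : inputs) {
                O processed = processor.apply(item);
                if (processed != null) {
                    outputs.add(processed);
                }
            }
            // 3. Hand the whole chunk to the writer at once (Spring Batch commits one transaction per chunk).
            if (!outputs.isEmpty()) {
                writer.accept(outputs);
                chunksWritten++;
            }
        }
        return chunksWritten;
    }

    public static void main(String[] args) {
        Iterator<String> input = List.of("alpha", "", "beta", "gamma", "delta").iterator();
        Supplier<String> reader = () -> input.hasNext() ? input.next() : null;
        Function<String, String> processor = s -> s.isEmpty() ? null : s.toUpperCase();
        List<List<String>> written = new ArrayList<>();
        int chunks = runStep(reader, processor, written::add, 2);
        // prints: 3 chunks: [[ALPHA], [BETA, GAMMA], [DELTA]]
        System.out.println(chunks + " chunks: " + written);
    }
}
```

Because a whole chunk is read and processed before it is written, the commit interval directly trades memory use and transaction size against the overhead of committing more often.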
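The Retry & Skip entry can be illustrated with a similarly simplified plain-Java model: each item is retried a limited number of times, then skipped, and the run fails once more items have failed than the skip limit allows. This is a sketch under stated assumptions, not Spring Batch's implementation (a fault-tolerant Spring Batch step also rolls back and re-processes chunks, which is omitted here), and RetrySkipModel, processAll, maxAttempts, and TooManySkipsException are hypothetical names:

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Framework-free model of retry-then-skip for single items. All names are
// hypothetical; this does not reproduce Spring Batch's chunk rollback/rescan.
public class RetrySkipModel {

    /** Thrown when more items fail than the skip limit allows, failing the "step". */
    public static class TooManySkipsException extends RuntimeException {
        public TooManySkipsException(String message, Throwable cause) {
            super(message, cause);
        }
    }

    public static <I, O> List<O> processAll(List<I> items, Function<I, O> processor,
                                            int maxAttempts, int skipLimit, List<I> skipped) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be >= 1");
        }
        List<O> results = new ArrayList<>();
        for (I item : items) {
            RuntimeException lastError = null;
            boolean succeeded = false;
            // Retry: call the processor up to maxAttempts times in total.
            for (int attempt = 1; attempt <= maxAttempts && !succeeded; attempt++) {
                try {
                    O result = processor.apply(item);
                    if (result != null) {
                        results.add(result);
                    }
                    succeeded = true;
                } catch (RuntimeException e) {
                    lastError = e;
                }
            }
            if (succeeded) {
                continue;
            }
            // Skip: give up on this item unless the skip limit is already used up.
            if (skipped.size() < skipLimit) {
                skipped.add(item);
            } else {
                throw new TooManySkipsException("Skip limit " + skipLimit + " exceeded at item " + item, lastError);
            }
        }
        return results;
    }

    public static void main(String[] args) {
        Map<Integer, Integer> calls = new HashMap<>();
        // Item 2 fails once (transient error); item 3 always fails (bad record).
        Function<Integer, Integer> flaky = n -> {
            int count = calls.merge(n, 1, Integer::sum);
            if (n == 2 && count == 1) throw new IllegalStateException("transient");
            if (n == 3) throw new IllegalArgumentException("bad record");
            return n * 10;
        };
        List<Integer> skipped = new ArrayList<>();
        List<Integer> results = processAll(List.of(1, 2, 3, 4), flaky, 3, 1, skipped);
        // prints: results=[10, 20, 40] skipped=[3]
        System.out.println("results=" + results + " skipped=" + skipped);
    }
}
```

Retrying suits transient failures (item 2 succeeds on its second attempt), while skipping suits bad records that will never succeed (item 3); the skip limit keeps a systematically broken input from being silently skipped in its entirety.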
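Partitioning can likewise be modeled without the framework. The sketch below splits an id range into contiguous sub-ranges, roughly the role a Spring Batch Partitioner plays when its partition(gridSize) method returns one ExecutionContext per partition, and then processes each range on a thread pool. The id-range strategy, the summing "work", and the names PartitionModel, partition, and runWorkers are assumptions for illustration:

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Framework-free model of partitioning; all names are hypothetical.
public class PartitionModel {

    /** Splits the inclusive id range [minId, maxId] into at most gridSize contiguous ranges. */
    public static Map<String, long[]> partition(long minId, long maxId, int gridSize) {
        if (gridSize < 1) {
            throw new IllegalArgumentException("gridSize must be >= 1");
        }
        Map<String, long[]> partitions = new LinkedHashMap<>();
        long total = maxId - minId + 1;
        long size = (total + gridSize - 1) / gridSize; // ceiling division
        long start = minId;
        for (int i = 0; start <= maxId; i++) {
            long end = Math.min(start + size - 1, maxId);
            partitions.put("partition" + i, new long[] {start, end});
            start = end + 1;
        }
        return partitions;
    }

    /** Processes every partition on a thread pool; each worker sums its ids as a stand-in for real work. */
    public static long runWorkers(Map<String, long[]> partitions, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<Future<Long>> futures = new ArrayList<>();
            for (long[] range : partitions.values()) {
                futures.add(pool.submit(() -> {
                    long sum = 0;
                    for (long id = range[0]; id <= range[1]; id++) {
                        sum += id; // placeholder for reading, processing and writing record `id`
                    }
                    return sum;
                }));
            }
            long total = 0;
            for (Future<Long> future : futures) {
                total += future.get(); // waits for each worker to finish
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("a worker failed", e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        Map<String, long[]> partitions = partition(1, 10, 3);
        partitions.forEach((name, r) -> System.out.println(name + ": " + r[0] + ".." + r[1]));
        System.out.println("total=" + runWorkers(partitions, 2));
    }
}
```

Contiguous id ranges are only one possible strategy; they work well when ids are dense, whereas sparse or skewed data may need a different split to keep partitions balanced.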

Basic Structure of a Spring Batch Application

Here’s a brief explanation of Spring Batch along with some code examples:

- Configuration: Spring Batch is configured using XML or Java configuration. Using Spring beans, you define jobs, steps, readers, processors, and writers.

- Create a Spring Project: Start by creating a new Spring project or adding Spring Batch to an existing one. Maven or Gradle can be used as the build tool to manage dependencies.

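- Add Spring Batch Dependencies: In the project's build configuration (pom.xml for Maven), include the necessary Spring Batch dependencies. A basic setup needs at least spring-batch-core plus any related dependencies; check the Spring Batch documentation for the latest versions. For example:

```xml
<dependencies>
    <!-- Spring Batch Core -->
    <dependency>
        <groupId>org.springframework.batch</groupId>
        <artifactId>spring-batch-core</artifactId>
        <version>${spring-batch.version}</version>
    </dependency>
    <!-- Other dependencies as needed, e.g. spring-boot-starter-batch when using Spring Boot,
         and a JDBC driver for the job repository database -->
</dependencies>
```

- Create a Batch Configuration: Define a Java configuration class and annotate it with @Configuration and @EnableBatchProcessing. In this class, configure the Spring Batch job, steps, readers, processors, and writers, as in the following snippet:

```java
import org.springframework.batch.core.Job;
import org.springframework.batch.core.Step;
import org.springframework.batch.core.configuration.annotation.EnableBatchProcessing;
import org.springframework.batch.core.configuration.annotation.JobBuilderFactory;
import org.springframework.batch.core.configuration.annotation.StepBuilderFactory;
import org.springframework.batch.core.launch.support.RunIdIncrementer;
import org.springframework.batch.item.ItemProcessor;
import org.springframework.batch.item.ItemWriter;
import org.springframework.batch.item.file.FlatFileItemReader;
import org.springframework.batch.item.file.builder.FlatFileItemReaderBuilder;
import org.springframework.batch.item.file.mapping.PassThroughLineMapper;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.ClassPathResource;

// Uses the Spring Batch 4.x builder factories; JobBuilderFactory and
// StepBuilderFactory are deprecated in Spring Batch 5.
@Configuration
@EnableBatchProcessing
public class BatchConfig {

    @Autowired
    private JobBuilderFactory jobBuilderFactory;

    @Autowired
    private StepBuilderFactory stepBuilderFactory;

    // Reads data.csv from the classpath, one line per item, as a plain String.
    @Bean
    public FlatFileItemReader<String> reader() {
        return new FlatFileItemReaderBuilder<String>()
                .name("itemReader")
                .resource(new ClassPathResource("data.csv"))
                .lineMapper(new PassThroughLineMapper())
                .build();
    }

    // Converts each item to upper case.
    @Bean
    public ItemProcessor<String, String> processor() {
        return item -> item.toUpperCase();
    }

    // Prints every item of a chunk to the console.
    @Bean
    public ItemWriter<String> writer() {
        return items -> {
            for (String item : items) {
                System.out.println("Writing item: " + item);
            }
        };
    }

    // Chunk-oriented step: items are read, processed, and written in chunks of 10.
    @Bean
    public Step step() {
        return stepBuilderFactory.get("step")
                .<String, String>chunk(10)
                .reader(reader())
                .processor(processor())
                .writer(writer())
                .build();
    }

    // RunIdIncrementer generates an incremented run.id parameter for launches that use it.
    @Bean
    public Job job() {
        return jobBuilderFactory.get("job")
                .incrementer(new RunIdIncrementer())
                .start(step())
                .build();
    }
}
```

- Job Configuration: The code above configures a Spring Batch job that reads each line of data.csv from the classpath as a String, converts it to upper case, and writes the processed items to the console in chunks of 10.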

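- Job Execution: With Spring Boot (2.x, matching the builder factories used in the configuration), starting the application is enough: Spring Boot's batch auto-configuration launches the configured jobs at startup through a JobLauncher. A minimal entry point looks like this:

```java
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class BatchApplication {
    public static void main(String[] args) {
        // Starting the application context lets Spring Boot launch the configured job(s).
        SpringApplication.run(BatchApplication.class, args);
    }
}
```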

- Batch Metadata: Spring Batch stores batch job metadata in the JobRepository, which is helpful for tracking and managing job executions. The following XML configuration sets up a job repository that stores metadata in a PostgreSQL database:

```xml
<!-- dataSource and transactionManager must be defined as beans elsewhere -->
<bean id="jobRepository"
      class="org.springframework.batch.core.repository.support.JobRepositoryFactoryBean">
    <property name="dataSource" ref="dataSource"/>
    <property name="transactionManager" ref="transactionManager"/>
    <property name="databaseType" value="POSTGRES"/>
</bean>
```

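- Execution: You can execute the job from the command line or trigger it programmatically using the JobLauncher. The following code launches the batch job with a jobId parameter set to the current timestamp. Because Spring Batch identifies a job instance by the job name plus its identifying parameters, a new value on each launch starts a new job instance instead of colliding with a previous, already completed run:

```java
import org.springframework.batch.core.Job;
import org.springframework.batch.core.JobExecution;
import org.springframework.batch.core.JobParameters;
import org.springframework.batch.core.JobParametersBuilder;
import org.springframework.batch.core.launch.JobLauncher;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Component;

// BatchJobRunner is a hypothetical wrapper; any Spring-managed bean can hold this method.
@Component
public class BatchJobRunner {

    @Autowired
    private JobLauncher jobLauncher;

    @Autowired
    private Job job;

    public void runBatchJob() throws Exception {
        // A unique value per launch creates a new job instance each time.
        JobParameters jobParameters = new JobParametersBuilder()
                .addString("jobId", String.valueOf(System.currentTimeMillis()))
                .toJobParameters();

        JobExecution jobExecution = jobLauncher.run(job, jobParameters);
        System.out.println("Job Status : " + jobExecution.getStatus());
    }
}
```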
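The timestamp parameter matters because of how the job repository identifies job instances. The following plain-Java model is a simplified sketch of that rule, assuming only identifying parameters and ignoring running executions and non-restartable jobs; JobInstanceModel and tryLaunch are hypothetical names, and this is not Spring Batch code:

```java
import java.util.HashMap;
import java.util.Map;
import java.util.TreeMap;

// Simplified, framework-free model of whether a job repository lets a launch proceed.
// Ignores non-identifying parameters, running executions, and non-restartable jobs.
public class JobInstanceModel {

    public enum Status { COMPLETED, FAILED }

    // Latest execution status per job instance (job name + identifying parameters).
    private final Map<String, Status> lastStatus = new HashMap<>();

    private static String instanceKey(String jobName, Map<String, String> params) {
        // Sort the parameters so that their order does not change the instance identity.
        return jobName + new TreeMap<>(params);
    }

    /** Returns true and records the outcome if the launch is allowed, false if it is rejected. */
    public boolean tryLaunch(String jobName, Map<String, String> params, Status outcome) {
        String key = instanceKey(jobName, params);
        if (lastStatus.get(key) == Status.COMPLETED) {
            // Spring Batch rejects this case with JobInstanceAlreadyCompleteException.
            return false;
        }
        // Either a brand-new instance or a restart of a failed one.
        lastStatus.put(key, outcome);
        return true;
    }

    public static void main(String[] args) {
        JobInstanceModel repo = new JobInstanceModel();
        System.out.println(repo.tryLaunch("job", Map.of("jobId", "1"), Status.FAILED));    // true: new instance
        System.out.println(repo.tryLaunch("job", Map.of("jobId", "1"), Status.COMPLETED)); // true: restart
        System.out.println(repo.tryLaunch("job", Map.of("jobId", "1"), Status.COMPLETED)); // false: already complete
        System.out.println(repo.tryLaunch("job", Map.of("jobId", "2"), Status.COMPLETED)); // true: new parameters
    }
}
```

This is why the launcher example passes a fresh jobId on every call and why the job configuration registers a RunIdIncrementer: reusing the parameters of a completed run would be rejected rather than starting a new run.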

Conclusion:

Spring Batch is a powerful framework for building batch-processing applications in Java. It provides a structured and scalable approach to handling batch jobs, making it easier to develop, test, and maintain complex data processing tasks. Whether you need to perform data migration, generate reports, or automate routine tasks, Spring Batch has you covered.

Overall, Spring Batch is a valuable tool wherever batch processing is essential, offering reliability, efficiency, and maintainability for complex data processing tasks.

bluethinkinc_blog

2023-09-11